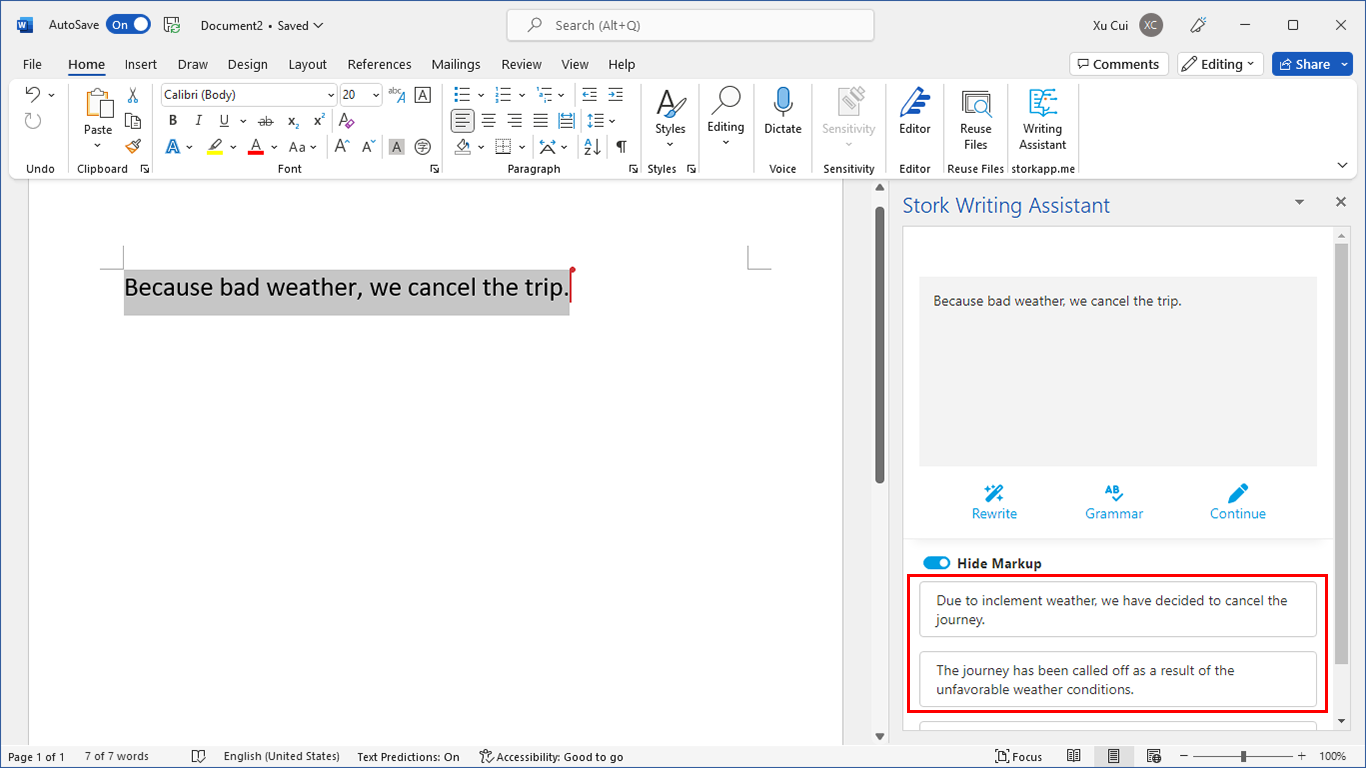

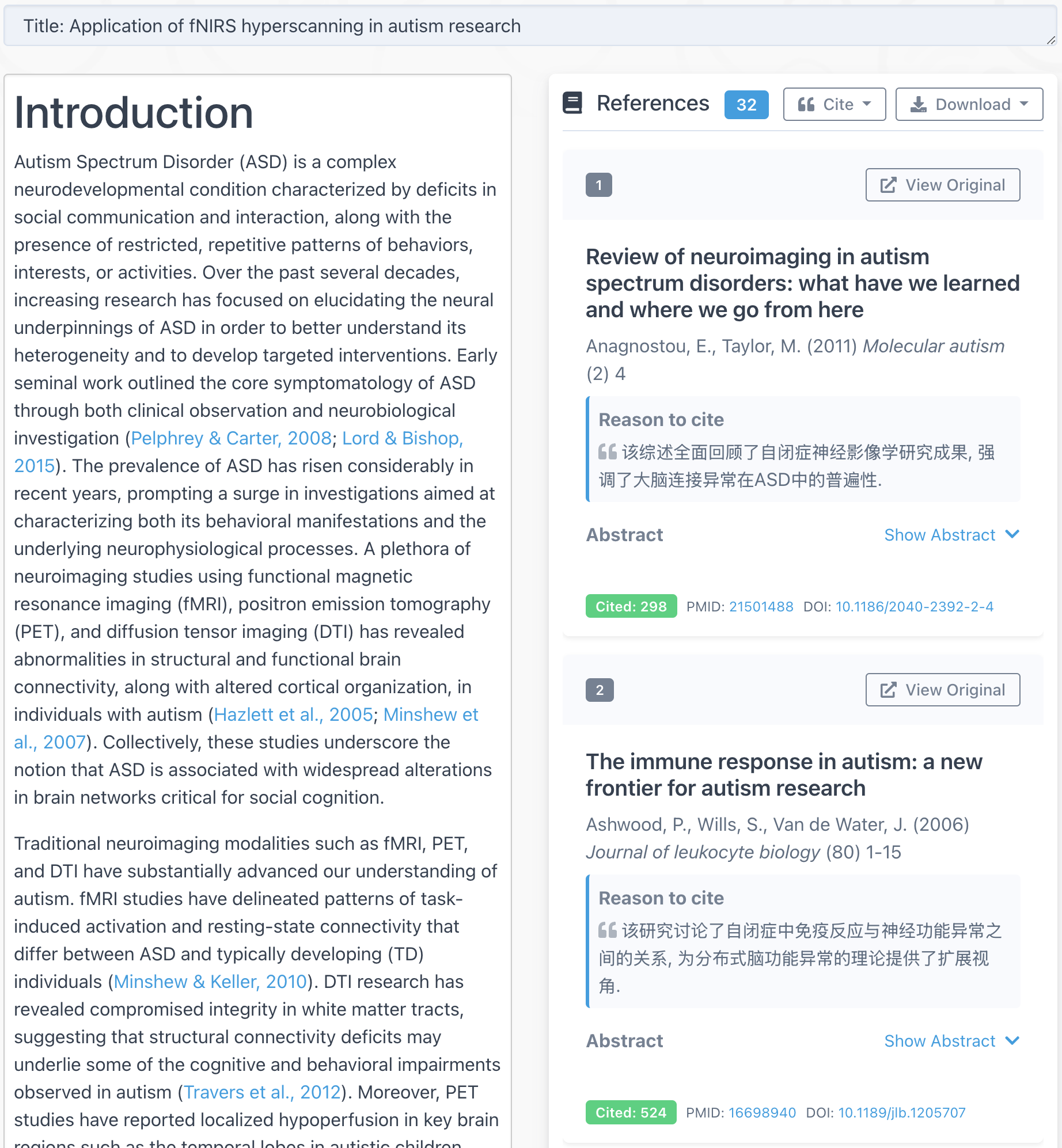

For this class project, I built a neural network to classify images from the CIFAR-10 dataset, which is freely available.

The dataset contains 60,000 color images (32×32 pixels) in 10 classes, with 6,000 images per class. It is split into 50,000 training images and 10,000 test images.
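To make the image layout concrete, here is a minimal sketch of how one CIFAR-10 record can be unpacked. It assumes the "python version" of the dataset, where each image is a flat row of 3072 values: 1024 red, then 1024 green, then 1024 blue, each channel stored in row-major order. The function name `row_to_image` is hypothetical and not part of any library, and the input row is synthetic.

```python
HEIGHT, WIDTH, CHANNELS = 32, 32, 3
PLANE = HEIGHT * WIDTH  # 1024 values per color channel


def row_to_image(row):
    """Convert one flat 3072-value CIFAR-10 row into image[y][x] = (r, g, b)."""
    if len(row) != PLANE * CHANNELS:
        raise ValueError("expected 3072 values, got %d" % len(row))
    image = []
    for y in range(HEIGHT):
        line = []
        for x in range(WIDTH):
            offset = y * WIDTH + x
            # Channel planes are stored one after another: R, G, B.
            line.append(tuple(row[c * PLANE + offset] for c in range(CHANNELS)))
        image.append(line)
    return image


# Synthetic row for illustration: red plane all 255, green all 128, blue all 0.
fake_row = [255] * PLANE + [128] * PLANE + [0] * PLANE
image = row_to_image(fake_row)
print(len(image), len(image[0]), image[0][0])
```

With NumPy, the same reordering for a whole batch is usually written as `data.reshape(-1, 3, 32, 32).transpose(0, 2, 3, 1)`.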

Here are the 10 classes in the dataset (the original page also shows 10 random images from each):

| Class |
| --- |
| airplane |
| automobile |
| bird |
| cat |
| deer |
| dog |
| frog |
| horse |
| ship |
| truck |

As you can imagine, it is not feasible to write down explicit rules for classifying these images, so we need a program that can learn from examples.

The neural network I created contains 2 hidden layers. The first is a convolutional layer with max pooling, followed by dropout that drops 70% of the connections. The second is a fully connected layer with 384 neurons, also followed by dropout.
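For readers unfamiliar with dropout, the sketch below shows the idea in plain Python rather than TensorFlow. It assumes the "inverted dropout" convention that `tf.nn.dropout` follows: each value is kept with probability `keep_prob`, and the survivors are scaled by `1 / keep_prob`. Under that convention, dropping 70% of the values corresponds to `keep_prob = 0.3`. The `dropout` function here is a hypothetical stand-in, not the library call.

```python
import random


def dropout(values, keep_prob, rng=random):
    """Zero each value with probability 1 - keep_prob; scale survivors by 1 / keep_prob."""
    if not 0.0 < keep_prob <= 1.0:
        raise ValueError("keep_prob must be in (0, 1]")
    return [v / keep_prob if rng.random() < keep_prob else 0.0 for v in values]


rng = random.Random(0)  # fixed seed so the run is repeatable
activations = [1.0] * 10000
dropped = dropout(activations, keep_prob=0.3, rng=rng)

kept_fraction = sum(1 for v in dropped if v != 0.0) / len(dropped)
mean_after = sum(dropped) / len(dropped)
print("kept fraction: %.3f, mean after dropout: %.3f" % (kept_fraction, mean_after))
```

Because of the rescaling, the average activation stays roughly the same during training. At evaluation time dropout is normally switched off by setting `keep_prob` to 1.0.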

```python
import tensorflow as tf

# conv2d_maxpool, flatten, fully_conn and output are helper functions
# defined earlier in the full notebook (linked at the end of this post).


def conv_net(x, keep_prob):
    """
    Create a convolutional neural network model
    : x: Placeholder tensor that holds image data.
    : keep_prob: Placeholder tensor that holds dropout keep probability.
    : return: Tensor that represents logits
    """
    # TODO: Apply 1, 2, or 3 Convolution and Max Pool layers
    #    Play around with different number of outputs, kernel size and stride
    # Function Definition from Above:
    #    conv2d_maxpool(x_tensor, conv_num_outputs, conv_ksize, conv_strides, pool_ksize, pool_strides)
    model = conv2d_maxpool(x, conv_num_outputs=18, conv_ksize=(4, 4), conv_strides=(1, 1),
                           pool_ksize=(8, 8), pool_strides=(1, 1))
    model = tf.nn.dropout(model, keep_prob)

    # TODO: Apply a Flatten Layer
    # Function Definition from Above:
    #   flatten(x_tensor)
    model = flatten(model)

    # TODO: Apply 1, 2, or 3 Fully Connected Layers
    #    Play around with different number of outputs
    # Function Definition from Above:
    #   fully_conn(x_tensor, num_outputs)
    model = fully_conn(model, 384)
    model = tf.nn.dropout(model, keep_prob)

    # TODO: Apply an Output Layer
    #    Set this to the number of classes
    # Function Definition from Above:
    #   output(x_tensor, num_outputs)
    model = output(model, 10)

    # TODO: return output
    return model
```
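As a sanity check on the layer sizes, the following sketch works out the flattened size and the parameter count for the settings used in `conv_net`. The padding used inside `conv2d_maxpool` is not shown in this post, so both common TensorFlow options (`'SAME'` and `'VALID'`) are computed. It also assumes that the pooling step uses the same padding as the convolution and that every layer has a bias term. The helper names `out_size` and `describe` are hypothetical.

```python
import math


def out_size(size, ksize, stride, padding):
    """Spatial output size of a conv/pool op under TensorFlow's padding rules."""
    if padding == "SAME":
        return math.ceil(size / stride)
    if padding == "VALID":
        return math.ceil((size - ksize + 1) / stride)
    raise ValueError("padding must be 'SAME' or 'VALID'")


def describe(padding, image=32, in_channels=3, conv_outputs=18,
             conv_k=4, conv_s=1, pool_k=8, pool_s=1, fc=384, classes=10):
    """Return (flattened size, total trainable parameters) for the network."""
    after_conv = out_size(image, conv_k, conv_s, padding)
    after_pool = out_size(after_conv, pool_k, pool_s, padding)
    flat = after_pool * after_pool * conv_outputs
    # Weights plus biases for each layer (biases are an assumption).
    conv_params = conv_k * conv_k * in_channels * conv_outputs + conv_outputs
    fc_params = flat * fc + fc
    out_params = fc * classes + classes
    return flat, conv_params + fc_params + out_params


for padding in ("SAME", "VALID"):
    flat, params = describe(padding)
    print(padding, "flattened:", flat, "parameters:", params)
```

Under either assumption, almost all of the parameters sit in the 384-neuron fully connected layer, because the flattened convolution output is so large.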

I then trained the network on an Amazon AWS g2.2xlarge instance. This instance has a GPU, which is much faster than a CPU for deep learning. In a simple experiment, running every layer on the GPU was about 2.6 times faster than placing just the convolutional layer on the CPU:

- all layers on the GPU: 14 seconds to run 4 epochs
- convolutional layer on the CPU, all other layers on the GPU: 36 seconds to run 4 epochs

This is admittedly a very crude comparison, but the GPU is clearly much faster than the CPU (at least for the ones in an AWS g2.2xlarge, which costs $0.65/hour).
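A comparison like this can be reproduced with a small timing harness. The sketch below is generic: `run_epoch` is a hypothetical placeholder for whatever function trains one epoch on a given device, and the speedup is simply the ratio of the two wall-clock times.

```python
import time


def time_epochs(run_epoch, epochs=4):
    """Return wall-clock seconds needed to call run_epoch `epochs` times."""
    start = time.perf_counter()
    for _ in range(epochs):
        run_epoch()
    return time.perf_counter() - start


def speedup(slow_seconds, fast_seconds):
    """How many times faster the fast run is than the slow one."""
    return slow_seconds / fast_seconds


# Placeholder workload standing in for one training epoch.
elapsed = time_epochs(lambda: sum(i * i for i in range(10000)))
print("4 epochs took %.4f s" % elapsed)

# Using the two measurements reported above (36 s vs. 14 s):
print("speedup: %.1fx" % speedup(36, 14))
```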

In the end I got ~70% accuracy on the test data, much better than random guessing (10%). Training the model took ~30 minutes.
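For reference, test accuracy here means the fraction of images whose highest-scoring class matches the true label. Below is a minimal sketch in plain Python. The logits and labels are made up purely for illustration and are not output from the model, and the `accuracy` helper is hypothetical.

```python
def accuracy(logits, labels):
    """Fraction of rows whose arg-max class index equals the true label."""
    if len(logits) != len(labels):
        raise ValueError("logits and labels must have the same length")
    correct = 0
    for scores, label in zip(logits, labels):
        # Index of the largest score is the predicted class.
        predicted = max(range(len(scores)), key=scores.__getitem__)
        if predicted == label:
            correct += 1
    return correct / len(labels)


# Made-up example with 3 classes and 4 images (not real model output).
logits = [[2.0, 0.1, -1.0], [0.3, 0.2, 0.9], [0.0, 1.5, 0.2], [1.1, 1.0, 0.4]]
labels = [0, 2, 1, 1]
print(accuracy(logits, labels))  # 3 of 4 predictions match
```

With 10 equally sized classes, guessing at random is right about 1 time in 10, which is where the 10% baseline comes from.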

You can find my entire code at:

https://www.alivelearn.net/deeplearning/dlnd_image_classification_submission2.html

Helpful post. Can you explain your motivation for using a standard deviation of 0.1 when initializing the weights? My network does not learn if I keep the standard deviation at 1. Only when I saw your post and changed my standard deviation to 0.1 did it start training. I would like to understand how you chose the standard deviation of 0.1 🙂
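One plausible explanation, offered as a rough sketch rather than a definitive answer: with zero-mean random weights, the spread of a neuron's pre-activation grows with the square root of the number of inputs. Assuming independent weights with standard deviation `weight_std` and inputs with root-mean-square value `input_rms`, the pre-activation standard deviation is about `weight_std * input_rms * sqrt(fan_in)`. The fan-in of 18432 below assumes `'SAME'` padding in the convolutional layer (32 × 32 × 18 flattened values), and `input_rms = 0.5` is an arbitrary illustrative value.

```python
import math


def preactivation_std(fan_in, weight_std, input_rms):
    """Std of sum_i w_i * x_i for independent zero-mean weights w_i."""
    return weight_std * input_rms * math.sqrt(fan_in)


fan_in = 32 * 32 * 18  # assumed flattened size feeding the 384-neuron layer
for weight_std in (1.0, 0.1):
    std = preactivation_std(fan_in, weight_std, input_rms=0.5)
    print("weight std %.1f -> pre-activation std ~ %.1f" % (weight_std, std))
```

Under these assumptions, a standard deviation of 1 gives initial activations that are ten times larger than with 0.1. Values that large tend to saturate the softmax and produce a very large initial loss, which can stall training. Schemes such as Xavier/Glorot or He initialization pick the scale from the fan-in automatically.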

Can you explain how you arrived at the values below?

model = fully_conn(model,384)

#model = fully_conn(model,200)

#model = fully_conn(model,20)