TL;DR

A Nature survey of over 5,000 researchers shows that most people accept using AI tools for translation, proofreading, and even writing the first draft—if disclosed. But using AI for peer review is widely frowned upon. Transparency is key.

AI writing tools are changing the way researchers work—faster drafts, better grammar, smoother translations. But some big questions remain:

📍 How much AI is too much?

📍 Is using AI in academic writing ethical?

📍 Should authors always disclose their use of AI?

A recent Nature news feature, titled "Is it OK for AI to write science papers? Nature survey shows researchers are split" (https://www.nature.com/articles/d41586-025-01463-8), provides valuable insights. Based on responses from more than 5,000 researchers across disciplines and career stages, the survey explored how AI is being used and what researchers think about it.

Overwhelming support for editing and translation

More than 90% of respondents said they were comfortable using generative AI to edit or translate their scientific writing. This includes improving grammar, clarity or structure, especially for those who are not native English speakers.

"I don't think it's any different than asking a colleague who is a native English speaker to read and revise your text," one respondent noted.

Two-thirds are OK with drafting—cautiously

Roughly two-thirds of researchers said it was acceptable to use AI to help write manuscripts, such as generating first drafts or summarizing content.

But the support was less enthusiastic than for translation and editing. Many researchers emphasized that AI should assist, not replace, the author’s thinking and expertise.

One respondent put it bluntly: "You can use AI to help you write your paper, but not to think for you."

Most researchers reject AI-written peer reviews

When it comes to peer review, attitudes shift.

More than 50% of respondents disapproved of using AI to write referee reports, citing concerns about confidentiality and scholarly responsibility.

Should you disclose AI use?

This was one of the most debated questions in the survey.

While many felt that basic editing or translation didn't require disclosure, most agreed that using AI to generate content—such as paragraphs or entire drafts—should be disclosed.

Journal policies vary widely:

📍 Lenient: Publishers like Springer Nature and IOP Publishing say that light editing doesn't require disclosure, as long as the authors take responsibility.

📍 Moderate: Wiley suggests that if AI is used to generate or rewrite text, authors should include a note in the manuscript.

📍 Strict: The JAMA journals require authors to specify which AI tools were used, how they were used, and to take full responsibility for any AI-generated content.

"It’s like disclosing a conflict of interest," said one journal editor. "It’s not that we mind if you use AI—we mind if you don’t tell us."

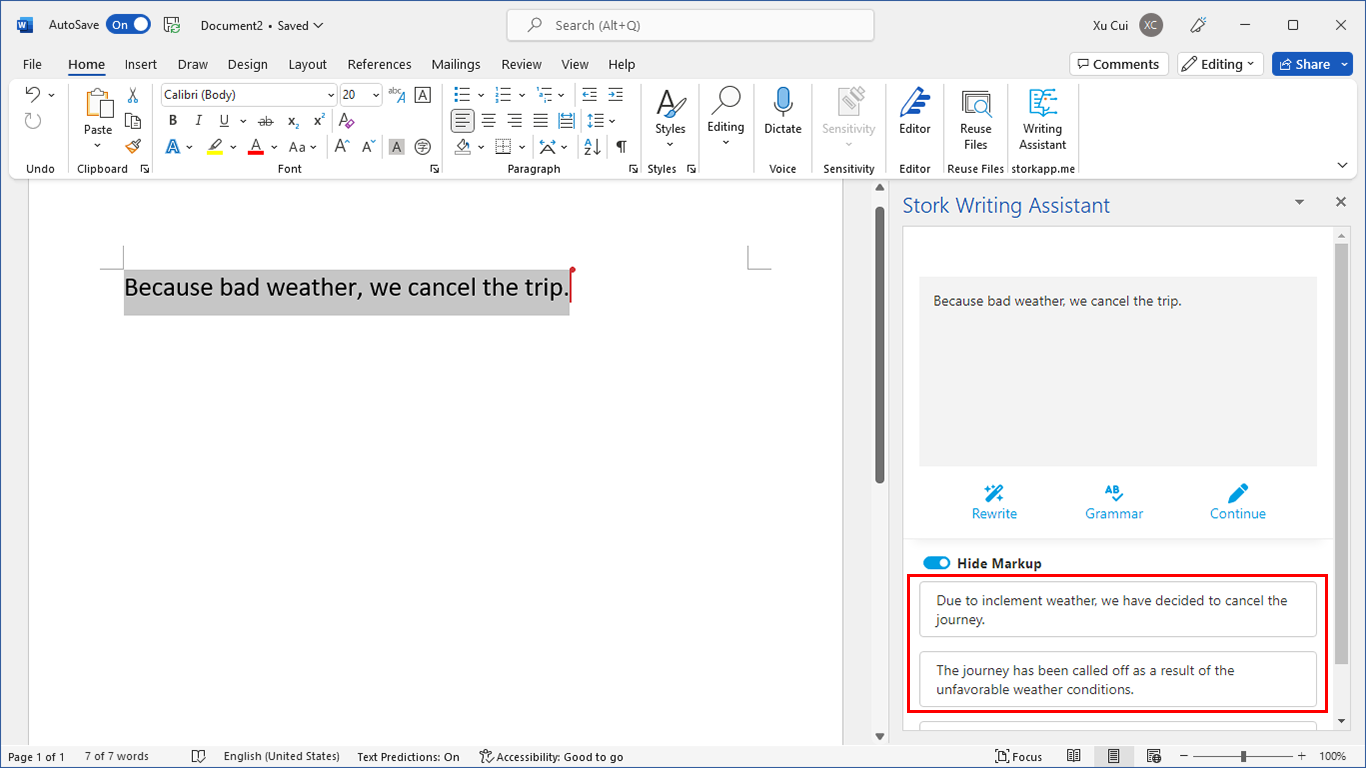

Are Stork's AI Tools Still Safe to Use?

Definitely, as long as you use them wisely. Stork's AI tools are here to help researchers work more efficiently.

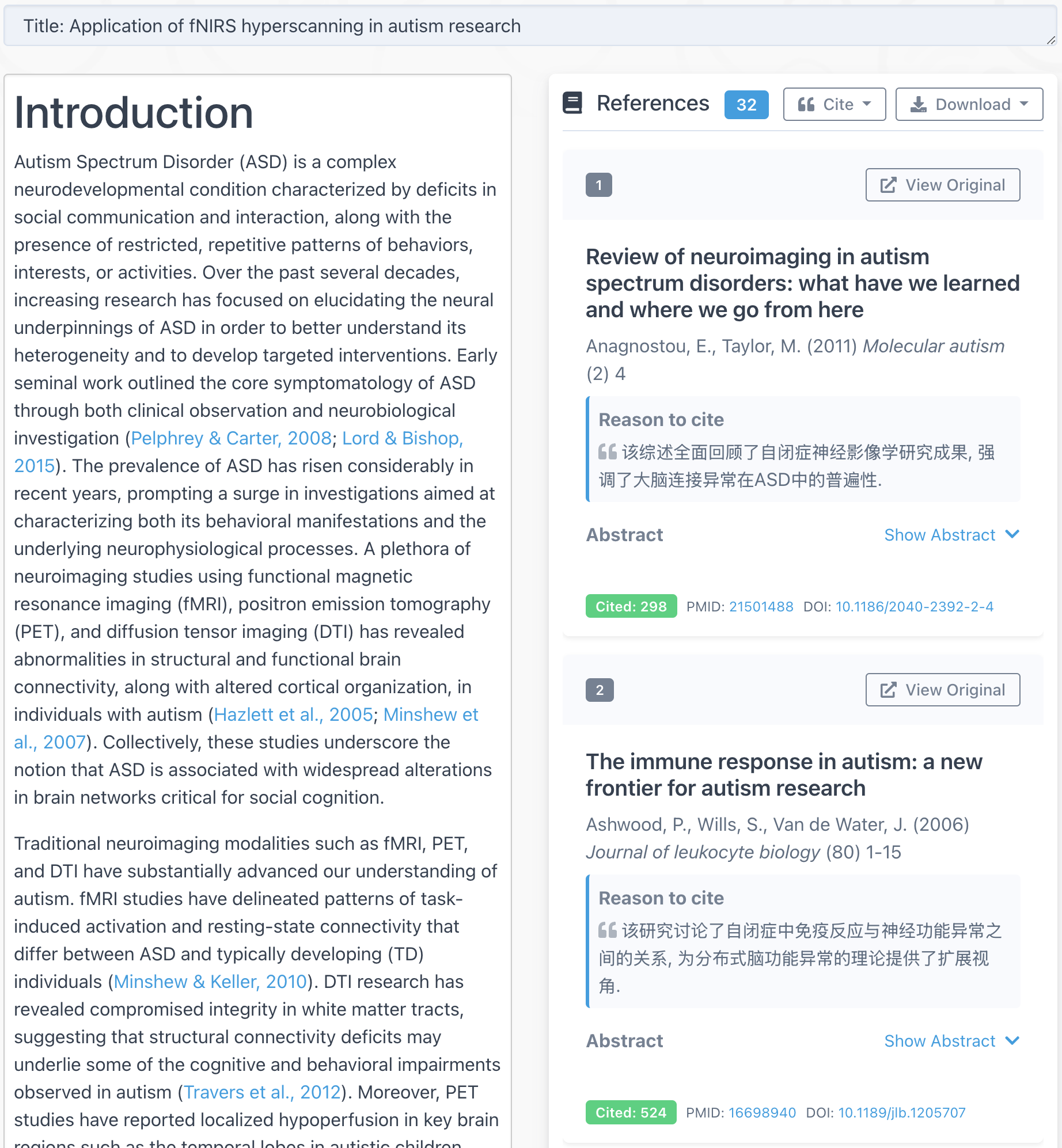

📍 For Writing Assistant and Translation: They can be incredibly helpful, especially for researchers writing in a second language. In fact, over 40 published papers have used Writing Assistant.

📍 For drafting with AI paper: It can be a great starting point. But you should personally review, verify, and revise the draft the AI generates.

📍 Before submission: Always check the journal's AI use policy. When in doubt, disclose AI involvement.

The academic world is still figuring out how best to use AI in research. Surveys like Nature's are just the beginning.

How do you use AI in your writing process? Please let us know.