AdaBoost is an algorithm that linearly combines many weak classifiers into a much better classifier. It has been widely used in computer vision (e.g. to detect faces in a picture or video). Look at the following example:

How does it work?

First, you have a training dataset and a pool of classifiers. Each classifier on its own does a poor job of classifying the dataset (these are usually called weak classifiers). You go through the pool and pick the one that does the best job, i.e. the one with the lowest weighted classification error. You then increase the weights of the samples it misclassified, so the next classifier has to do better on those samples, and you go through the pool again. Formally, the algorithm is:

(From http://cmp.felk.cvut.cz/~sochmj1/adaboost_talk.pdf)

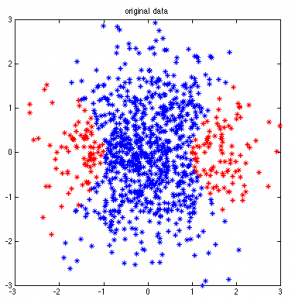

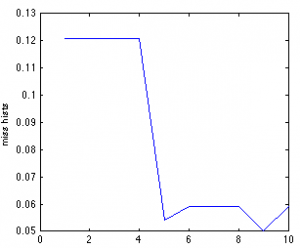
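The procedure in the slides above can be sketched in a few lines of Python (the post's own code is MATLAB; Python is used here only for illustration). This is a minimal sketch, not the code attached to this post: the names `stump_predict`, `train_adaboost` and `strong_predict` are made up for this example, a weak classifier is assumed to be a `(dimension, threshold, direction)` tuple, labels are assumed to be +1/-1, and the small clamp on the error is an added safeguard against dividing by zero.

```python
import math

def stump_predict(stump, x):
    """Label one sample with a single-threshold rule: returns +1 or -1."""
    dim, threshold, direction = stump      # assumed layout of a weak classifier
    return direction if x[dim] > threshold else -direction

def train_adaboost(samples, labels, pool, rounds):
    """Select up to `rounds` weak classifiers from `pool`; labels must be +1/-1."""
    m = len(samples)
    D = [1.0 / m] * m                      # start with uniform sample weights
    chosen, alphas = [], []
    for _ in range(rounds):
        # Weighted error of a pool member under the current weights D.
        def weighted_error(stump):
            return sum(d for d, x, y in zip(D, samples, labels)
                       if stump_predict(stump, x) != y)
        best = min(pool, key=weighted_error)
        err = weighted_error(best)
        if err >= 0.5:                     # no better than chance: stop early
            break
        err = max(err, 1e-10)              # added safeguard for a perfect stump
        alpha = 0.5 * math.log((1 - err) / err)
        # Raise the weights of misclassified samples, lower the rest.
        D = [d * math.exp(-alpha * y * stump_predict(best, x))
             for d, x, y in zip(D, samples, labels)]
        Z = sum(D)                         # normalization factor
        D = [d / Z for d in D]
        chosen.append(best)
        alphas.append(alpha)
    return chosen, alphas

def strong_predict(chosen, alphas, x):
    """Sign of the alpha-weighted vote; a tie is arbitrarily mapped to +1."""
    score = sum(a * stump_predict(h, x) for a, h in zip(alphas, chosen))
    return 1 if score >= 0 else -1
```

For example, `train_adaboost(samples, labels, pool, 10)` returns up to 10 stumps and their weights; it may return fewer if the best remaining stump is no better than chance.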

Now let’s try it. In the following example, the two classes are not linearly separable. The weak classifiers in our pool are all very simple: each can only classify with a single threshold on a single data dimension. We repeat 10 times (i.e. select 10 weak classifiers) and combine them to form a strong classifier.

Original dataset:

The strong classifier gets better and better as more weak classifiers are incorporated. t is the number of weak classifiers included.

The error rate decreases as more and more weak classifiers are included:

Here is the source code:

example.m

weakLearner.m

Excellent tutorial to learn about adaboost classifier. Thanks a lot!

This is the best explanation of AdaBoost I found on the web. After reading this and understanding AdaBoost, I implemented it in Python and used it in my project. Thank you very much!

Very nice and helpful tutorial. But it would be a lot more attractive, and the basics would be easier to understand, if you explained each step of the algorithm along with the reason for doing that step.

Can you give me the AdaBoost code for training and detection?

thank you!

@jason

The code is attached in this post.

Mr.Xu Cui, can you explain about Zt?

thank you

@hani

Zt is the normalization factor; it makes sure the sum of D is 1.

How can we get Zt? Which D do you refer to in “sum of D is 1”, Dt(i) or Dt+1(i)? And what happens if the sum of D is less or more than 1?

Thank you.

@hani

Zt is the sum over i of the updated weights at each time step, so after dividing by it the new D sums to 1. You may refer to the code.
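To make the role of Zt concrete, here is a small Python sketch of a single weight update (an illustration written for this thread, not the attached MATLAB code; the function name `update_weights` and the four-sample numbers are made up). Zt is the sum of the weights after the exponential update and before normalization, so it is Dt+1 that sums to 1.

```python
import math

def update_weights(D, labels, predictions, alpha):
    """One AdaBoost weight update; returns (new_D, Z)."""
    unnormalized = [d * math.exp(-alpha * y * h)
                    for d, y, h in zip(D, labels, predictions)]
    Z = sum(unnormalized)              # the normalization factor Z_t
    return [u / Z for u in unnormalized], Z

# Made-up example: four samples, uniform weights, one mistake.
D_t = [0.25, 0.25, 0.25, 0.25]
labels = [1, 1, -1, -1]
predictions = [1, 1, -1, 1]            # the last sample is misclassified
err = 0.25                             # weighted error of this classifier
alpha = 0.5 * math.log((1 - err) / err)
D_next, Z = update_weights(D_t, labels, predictions, alpha)
print(D_next, sum(D_next), Z)
```

In this example the single misclassified sample ends up carrying half of the total weight, which is what the update does whenever alpha is computed from the weighted error as above.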

Thank you for your excellent adaboost tutorial.

I would appreciate it if you could send me multiclass AdaBoost MATLAB code.

yours faithfully.

@Mohammad

Mohammad, I don’t have multiclass adaboost code.

Thanks for the post and the code, it’s helping me a lot! I’m having trouble with some bits, though. It seems that for one instance of t you are training 244 classifiers and pick the one with the lowest error. Each of these temporary classifiers has attributes threshold, dimension and direction. These directions, can those be interpreted as class labels? The threshold, is that a horizontal decision boundary?

I’m also curious about the dimension variable. It looks like x has 2 features (so for example weight and height). When searching for a classifier, you only consider 1 dimension at a time? I haven’t encountered this in the pseudo-code in the scientific literature (which leaves out many details, so I’m not implying you are doing something wrong). I’m trying to work towards boosting with SVR as a base learner with continuous data, so training on only one dimension seems troublesome for my goal.

Isn’t it true that AdaBoost doesn’t actually create a “SINGLE STRONG CLASSIFIER” but uses a set of weak classifiers to achieve better performance in the testing phase, after assigning weights in the training phase?

I’ve read that AdaBoost also picks the best features, apart from weighting weak classifiers. How does AdaBoost pick the best features from the data?

@Rahul

The single, strong classifier consists of several weak classifiers. It takes the predictions from the weak ones and combines them into one single prediction; in AdaBoost this is the sign of the alpha-weighted sum of the weak predictions.

@Rahul

You are mixing two different things here. The weak classifiers are weighted based on the error (expressed as alpha, you can look it up in the code).

The datapoints are weighted, as to put more emphasis on the datapoints that are difficult to classify. This is done by changing weight matrix D (also see code).
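A short Python sketch of the first kind of weight, the classifier weight alpha, as a function of the weighted error. The helper name `classifier_weight` is made up for illustration; the formula is the standard AdaBoost one, alpha = 1/2 * log((1 - err) / err). The data weights D are a separate quantity, updated after alpha is known.

```python
import math

def classifier_weight(err):
    """AdaBoost vote weight for a weak classifier with weighted error `err` (0 < err < 1)."""
    return 0.5 * math.log((1 - err) / err)

# The lower the error, the larger the say in the final vote;
# a classifier at chance level (err = 0.5) gets weight 0.
for err in (0.1, 0.3, 0.45, 0.5):
    print(err, classifier_weight(err))
```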

Is AdaBoost used only for learning/training? Can AdaBoost be used for testing a new case (from the formula above, to find E we must sum Dt(i) over the samples where yi != hj(xi))? If AdaBoost is only used on the learning samples, how can we get the prediction for a new case or new data?

Excellent tutorial about the AdaBoost classifier. Thank you.

But I have a question: can I use an SVM classifier instead of weakLearner? If yes, could you please help me with this?

Thank you

@rima

The weak learner can be anything, including SVM.

@Xu Cui

so can you please help me to use libsvm as my weaklearner?

@rima

Sure. Are there any specific questions I can answer?

As you know, the function svmtrain of libsvm (my weak learner in this case) has this structure: [model]=svmtrain(label,data), and the model is a struct with 10 fields. So how am I supposed to deal with this structure to be able to run the example, given that the weak learner above doesn’t have the same input and output as the function svmtrain? I hope that was clear.

@rima

What you could do is, use svmtrain to get a model, then use that model and svmpredict in the weaklearner.
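One way to plug an external learner into the boosting loop is to train it on a resample of the data drawn according to D, since many learners do not accept per-sample weights directly. The Python sketch below illustrates that idea only; it is an assumption about how one might do it, not the attached code. `train_fn` and `predict_fn` are hypothetical stand-ins for a train/predict pair such as svmtrain/svmpredict, and `train_majority`/`predict_majority` are toy placeholders so the example runs without any SVM library.

```python
import random

def resample_by_weight(samples, labels, D, rng):
    """Draw len(samples) training pairs with probability proportional to D."""
    idx = rng.choices(range(len(samples)), weights=D, k=len(samples))
    return [samples[i] for i in idx], [labels[i] for i in idx]

def fit_weak_learner(train_fn, predict_fn, samples, labels, D, rng):
    """Train on a D-weighted resample, then score on the full weighted set."""
    xs, ys = resample_by_weight(samples, labels, D, rng)
    model = train_fn(xs, ys)
    predictions = predict_fn(model, samples)     # expected to be +1/-1 per sample
    err = sum(d for d, p, y in zip(D, predictions, labels) if p != y)
    return model, predictions, err

# Toy placeholder learner (hypothetical): always predicts the majority label.
def train_majority(xs, ys):
    return 1 if sum(ys) >= 0 else -1

def predict_majority(model, xs):
    return [model] * len(xs)
```

The returned `err` is the weighted error that the boosting loop needs to compute alpha and to update D.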

Besides svm, what are the other weaker learners?

@Xu Cui

I will use only svm

@Xu Cui

Do you have an idea please how to use svmpredict and the model in the weaklearner??

Mr.Xu Cui, can we use ADABOOST to improve the performance of Adaptive filtering technique (LMS)?

If YES can you explain the code how to append the adaboost & LMS so that the robustness can be improved.

@rima: svmpredict returns a set of predicted labels. You then need to calculate error(t) as described in the algorithm and update the weights. Repeat until the number of iterations is reached or et is more than 1/2.

@Xu Cui: whats the motivation to use thresholds (-3 to 3)? In the code you mention that you are using 244 weak classifiers? But in the article you mention you are using T=10 weak classifiers?

@Rahul

That’s because the data range is from -3 to 3.

The pool of weak classifiers contains 244 weak classifiers. Then, one at a time, we choose 10 weak classifiers to include in our strong classifier. The criterion for choosing a weak classifier is that it is the best one (among the 244) at correctly classifying the weighted data.
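A Python sketch of such a pool and of the selection step. The attached MATLAB code is not reproduced here, so the exact makeup of the 244 classifiers is an assumption: 61 thresholds from -3 to 3 in steps of 0.1, times 2 dimensions, times 2 directions, happens to give 244. The names `build_pool`, `stump_predict` and `best_stump` are made up for this example, and so is the convention that a sample above the threshold gets the label `direction`.

```python
def build_pool(n_dims=2, lo=-3.0, step=0.1, n_thresholds=61):
    """All (dimension, threshold, direction) stumps over a regular threshold grid."""
    return [(dim, lo + k * step, direction)
            for dim in range(n_dims)
            for k in range(n_thresholds)
            for direction in (1, -1)]

def stump_predict(stump, x):
    """Samples above the threshold get `direction`, the rest get `-direction`."""
    dim, threshold, direction = stump
    return direction if x[dim] > threshold else -direction

def best_stump(pool, samples, labels, D):
    """Return the pool member with the lowest D-weighted error, and that error."""
    def weighted_error(stump):
        return sum(d for d, x, y in zip(D, samples, labels)
                   if stump_predict(stump, x) != y)
    best = min(pool, key=weighted_error)
    return best, weighted_error(best)

pool = build_pool()
print(len(pool))   # 2 dimensions * 61 thresholds * 2 directions = 244
```

Because the error is weighted by D, the same pool yields a different "best" classifier in each round once the sample weights have changed.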

@Xu Cui,

Thank you very much for the tutorial. I would like to ask for your advice on programming the AdaBoost algorithm for two classes. I used the ionosphere data set from the UCI repository, with subtractive clustering as my weak learner. After a number of iterations the error of the weak classifier is greater than 0.5, no matter what value I give for the radius of the cluster. I thought that clustering on a two-class data set would give an output better than random guessing, but now I’m confused about it.

Can you advise me in this matter please.

Thank you in advance

Hi

I have the same problem as Rima had. I want to use SVM as my weak classifier and then adaboost. Can you please let me know how I can use your code for adaboost. I am confused. Thanks a lot

I need to implement the RUSboost algorithm fully in MATLAB and I am stuck with the Weak Learner part. I want to simply use SVM as my Weak Learner but not sure about the parameters that should be passed in the svmtrain and the svmclassify function. To be more specific, two commands are used for training and predicting in the SVM,

svmStruct=svmtrain(train_data,train_label,'kernel_function','rbf');

PredictedLabel=svmclassify(svmStruct, test_data);

According to the algorithm, the undersampled data and the weights W (which are all initialized to the same value) should be used for training the weak learner. So my question is: how should I introduce the weights into my SVM?

Dear Xu Cui,

How can I use the AdaBoost algorithm in Java or C++ for object recognition?

The object can be a symbol or anything else.

Thanks in Advance.

@Ashish

Unfortunately I am not familiar with java/c++.

tell me in any programming language you know…

thanks in advance

Other AdaBoost descriptions talk about “iterations” (100 or so). How do “iterations” relate to this explanation of AdaBoost?

Dear Xu Cui,

Can you please tell me, in your algorithm above, what (x1, y1) to (xm, ym) are? Also, what is the value of m, and how is it calculated?

@chen

I went through the whole algorithm and I understood it. But how is it used for face detection?

A reply would be appreciated.

Regards,

Ashish Kumar

@Ashish

Unfortunately I don’t know. I read somewhere that they use AdaBoost for face detection.

hi

Thanks a lot for this project. I work on the AdaBoost algorithm and signals. Can you help me with this?

thanks

Thank you Mr. Xu Cui for this great explanation of AdaBoost. I’ve read most of the articles about AdaBoost and I still couldn’t understand one step of this algorithm. I have been working with classifiers like naive Bayes, nearest neighbor, and Bayesian linear and quadratic classifiers. As you may know, these classifiers work with training samples so they can compute their decision rule and then predict the labels of the test samples. But I have noticed that with AdaBoost there is no need for training and testing samples (correct me if I’m wrong). Can I work with one of these classifiers as my weak learner? And in this case, how can I train the weak classifiers using Dt in each iteration?

Thanks in advance

@Wissal

In principle you can use part of your data as training, then you get a strong classifier. Use this strong classifier to test the remaining data.

Yes, you can use one (or more) of your classifiers as weak learner. You do not “train” your weak classifier, instead you pick the best weak classifier. For example, if you use quadratic classifier, you probably have parameters you can change. Each parameter value corresponds to a weak classifier.

how can this code be used on a database which has palm images?

I need it for my project in palmprint recognition

Hi, if we want to increase the number of features, which part of the algorithm will change?

I mean, instead of 2 features (weight and age), we add more than 2.

Thanks very much for your explanation. It’s pretty good.

But could it be used for face recognition?

If yes, I am curious about the accuracy it can reach.

Could it do better than PCA + LDA, or various kernel methods?

@cheer

cheer, face recognition is unfortunately an area I am not familiar with. But based on a search, AdaBoost has apparently been used in this area. If you know the answer please let us know.

@parmin

You can add many features …

Does it look like a good thing to try to boost several already “strong” classifiers of different types? For example, to combine SVM, kNN and some neural networks classifiers? I am going to try this anyway but maybe there is some information about such a thing? Thanks!

I never tried but I do think it’s a great idea. Do you mind letting me know how much improvement you can get?

I do not understand the meaning of threshold, dimension and direction, nor how their values are chosen.

Any clue for a beginner?

Hello Dirk-Jan Kroon,

Can you please let me know how I can use a neural network as the weak classifier? Can you tell me how to connect the AdaBoost algorithm with the neural network?

Thanks

This is the best explanation of Adaboost. thank you. ^^

Hi Mr Xu,

Thank you very much for your very helpful code. Can you please explain one point to me? I’m working on AdaBoost with the aim of selecting the best features, so I use the tmph struct to obtain the dimensions of the best features (tmph.dim = kk). But I am confused because I sometimes find tmph.pos = 1 and sometimes tmph.pos = -1. My question is: by -1 do you mean that those features are the best features representing the first class, and that features with position 1 are the ones representing the second class?

Or do you mean that all selected features (pos = 1 and pos = -1) represent the first class (the discrimination between the two classes)?

I would be very grateful if you could answer my question.

Thank you in advance

Hi Mr Xu,

Thank you very much for your very helpful code. Is there any provision so that, instead of taking the training data as 1000 2D samples, a text, xls or csv file of a data set can be attached to the code to get the outputs?

I would be very grateful if you could answer my question.

@Yogesh

I do not know if there is such package …

Hi Xu, first I want to thank you for the code, it’s been a great help for me. My question is: after the algorithm is trained, at what point in the code can I enter the testing data? @Xu Cui

@Ruben Dario

You can put your data to “data” variable below:

    finalLabel = 0;
    for t=1:length(alpha)
        finalLabel = finalLabel + alpha(t) * weakLearner(h{t}, data);
    end
    tfinalLabel = sign(finalLabel);
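For readers working in Python, the same loop might look like the sketch below. `strong_classify` is a made-up name, and `weak_learner(h, data)` is assumed to return one +1/-1 vote per row of `data`, mirroring how `weakLearner(h{t}, data)` is called above. `stump_votes` is a hypothetical stand-in for the real weak learner, and a tie returns 0, as MATLAB's `sign` does.

```python
def strong_classify(alphas, classifiers, weak_learner, data):
    """Return sign(sum_t alpha_t * h_t(x)) for every row of `data`."""
    totals = [0.0] * len(data)
    for alpha, h in zip(alphas, classifiers):
        votes = weak_learner(h, data)            # one +1/-1 vote per row
        totals = [s + alpha * v for s, v in zip(totals, votes)]
    return [1 if s > 0 else -1 if s < 0 else 0 for s in totals]

# Hypothetical weak learner: h is a (dimension, threshold, direction) tuple.
def stump_votes(h, data):
    dim, threshold, direction = h
    return [direction if x[dim] > threshold else -direction for x in data]

new_data = [(-1.0,), (0.5,), (2.0,)]             # unseen samples go here
labels = strong_classify([1.0, 0.4], [(0, 0.0, 1), (0, 1.0, -1)],
                         stump_votes, new_data)
print(labels)
```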

Hi Xu,

Thank you very much for your useful code.

I would be grateful if you could put a code for multiclass adaboost.

Hi Mr Xu Cui

Thank you for your useful tutorial.

It really helped me.

I have a question here. I don’t get what “position” does in the code.

I know it is used in the classification part by the weak learner, but I don’t know its purpose or why it is fixed to -1 and 1.

thank you, again

How do you find the normalisation factor?

    label = double((data(:,1).^2 - data(:,2).^2)<1); % a nonlinear separation example
    %label = double((data(:,1).^2 + data(:,2).^2)<1); % a nonlinear separation example
    pos1 = find(label == 1);
    pos2 = find(label == 0);
    label(pos2) = -1;

    % plot training data
    figure('color','w');plot(data(pos1,1), data(pos1,2), 'b*')
    hold on;plot(data(pos2,1), data(pos2,2), 'r*')
    xlim([-3 3])
    ylim([-3 3])
    title('original data')
    axis square

    % addBoost
    T = 10;
    D = ones(size(label))/length(label);
    h = {};
    alpha = [];
    for t=1:T
        err(t) = inf;
        [out tree acc er] = weaktree(data,label);
        tmpe = sum(D.*(weaktree(data,label)~= label));
        if( tmpe >= 1/2)
            disp('stop b/c err>1/2')
            break;
        end
        alpha(t) = 1/2 * log((1-err(t))/err(t));
        D = D.* exp(-alpha(t).*label.* weaktree2(h{t}, data));
        D = D./sum(D);
    end

stop b/c err>1/2

I want to implement AdaBoost.M1 but I don’t understand arcmax and I cannot write the code. Please show an example of AdaBoost.M1. Thank you

hi

Can you please let me know how can I use svm as weak classifier?

hi, thanks for your tutorial.

i have a problem in using svm as a weak learner.

i have no idea. i need to guide me how to use another learner.

im gonna use svm as weak learner. but i dont know how.

please help me.

i would appreciate if you reply me by email.

this is my email address :[email protected]

please guide me.

best regards

m. norizadeh